As we turn the page to 2021, a change I’m sure most people are eager for in light of this year’s world events, it’s time to look at what B2B SEO practitioners should be planning for to stay current and take advantage of emerging trends. As we evaluate the changes that took place in 2020 and the expectations for 2021 in order to craft a winning SEO strategy, it helps to put a framework around our planning by segmenting activities into the following categories:

- Technical SEO

- SEO Content Strategy

- Universal Search

- Video

- Voice

- Structured Data

In this first of six articles, one for each of these categories, we will look at the 2021 SEO priorities that should be top of mind for B2B companies that want to stay ahead of their competition.

2021 Technical B2B SEO Priorities

Technical SEO is a wide field with numerous potential areas of concern depending on the size of your site, how complex it is, and what JavaScript you may be using. However, some priorities for the new year apply to all sites. Here are my Top 5 B2B Technical SEO priorities for 2021:

1) Improve Google Core Web Vitals

If you haven’t heard yet, Google intends to make Core Web Vitals a ranking factor in May of 2021 and has given webmasters and site owners notice to make the necessary changes now or suffer the consequences later. Core Web Vitals are being grouped with several other usability factors under the umbrella of Page Experience. Google’s recent blog post on the upcoming changes describes all of the issues that need to be addressed.

Some of the issues are straightforward, like ensuring your site is secure. For some sites, ensuring that the site is mobile friendly and serves the same content to mobile and desktop users will also be straightforward, while others will have challenges to work through to comply with that directive. But in many more cases, the big obstacle to Page Experience success will be Core Web Vitals and page speed.

The following definition of Core Web Vitals is taken directly from this page on Google’s site:

https://web.dev/vitals/

Core Web Vitals

Core Web Vitals are the subset of Web Vitals that apply to all web pages, should be measured by all site owners, and will be surfaced across all Google tools. Each of the Core Web Vitals represents a distinct facet of the user experience, is measurable in the field, and reflects the real-world experience of a critical user-centric outcome.

The metrics that make up Core Web Vitals will evolve over time. The current set for 2020 focuses on three aspects of the user experience—loading, interactivity, and visual stability—and includes the following metrics (and their respective thresholds):


Largest Contentful Paint (LCP): measures loading performance. To provide a good user experience, LCP should occur within 2.5 seconds of when the page first starts loading.

First Input Delay (FID): measures interactivity. To provide a good user experience, pages should have a FID of less than 100 milliseconds.

Cumulative Layout Shift (CLS): measures visual stability. To provide a good user experience, pages should maintain a CLS of less than 0.1.

For each of the above metrics, to ensure you’re hitting the recommended target for most of your users, a good threshold to measure is the 75th percentile of page loads, segmented across mobile and desktop devices.
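To make the 75th-percentile guidance concrete, here is a minimal Python sketch that checks field measurements against the thresholds quoted above. It assumes you have already exported per-page-load values (for example from your own real-user monitoring) into plain lists, one set per device segment; the input format and function names are hypothetical, and it uses the simple nearest-rank percentile method, which may differ slightly from how Google’s tools aggregate data.

```python
import math

# "Good" thresholds quoted above (2020 Core Web Vitals set).
GOOD_THRESHOLDS = {
    "LCP": 2.5,    # Largest Contentful Paint, seconds
    "FID": 100.0,  # First Input Delay, milliseconds
    "CLS": 0.1,    # Cumulative Layout Shift, unitless score
}


def percentile_75(values):
    """Nearest-rank 75th percentile of a list of field measurements."""
    if not values:
        raise ValueError("need at least one measurement")
    ordered = sorted(values)
    rank = math.ceil(0.75 * len(ordered))  # 1-based nearest rank
    return ordered[rank - 1]


def assess(samples):
    """Map each metric to (p75 value, passes threshold).

    samples: dict of metric name -> list of per-page-load values for ONE
    device segment (hypothetical format). A value exactly equal to the
    threshold is treated as passing, which is an assumption for that edge case.
    """
    report = {}
    for metric, threshold in GOOD_THRESHOLDS.items():
        p75 = percentile_75(samples[metric])
        report[metric] = (p75, p75 <= threshold)
    return report


if __name__ == "__main__":
    mobile = {"LCP": [1.8, 2.1, 2.4, 3.9], "FID": [20, 40, 90, 300], "CLS": [0.0, 0.05, 0.2, 0.3]}
    for metric, (p75, ok) in assess(mobile).items():
        print(f"mobile {metric}: p75={p75} {'good' if ok else 'needs work'}")
```

Run `assess` separately on your mobile and desktop samples, since the guidance above calls for segmenting by device.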

Improving the scores for each of these new metrics can be a very complex endeavor that requires a deep technical understanding of your code, especially for enterprise-level sites with multiple CMS platforms and page templates. It will almost certainly require IT resources, as well as ongoing attention and education, because these metrics are subject to change.

This is going to be a resource-intensive initiative for many companies, so it should be planned and budgeted for immediately as a priority for your 2021 SEO program.

2) Ensure all of your content is being indexed by Search Engines

This means not just your URLs, although those are obviously the top priority, but also all of the content on your pages, so that you aren’t affected by partial indexing. Partial indexing can happen at the block level, meaning that certain areas of the page are not indexed, either because the way they are implemented hides them from Google or, in some cases, because of low quality.

The cache command in Google is no longer a good way to check indexing because it doesn’t render everything the engine sees, especially content generated with JavaScript. Once upon a time the cache command could show the difference between what was on your page and what Google was able to see, but that is no longer the case.

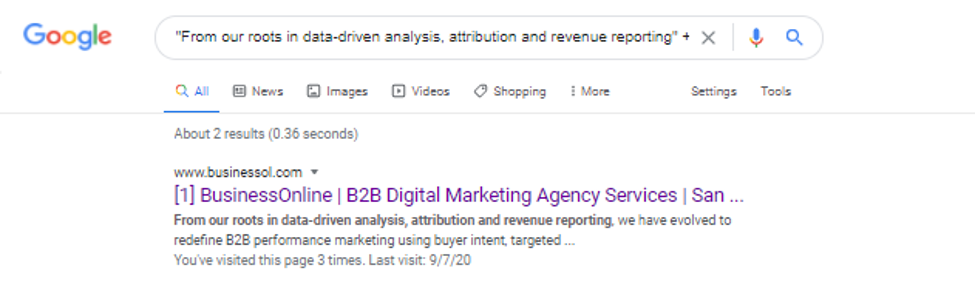

Rather than the cache command, combine a unique string of text from your page with the site command:

"Unique string of text in quotes" site:www.yoursite.com

Here is an example for BusinessOnline:

It’s good to see that our homepage content is correctly indexed 🙂
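If you run this check often, a small helper that assembles the query, plus a search URL to open in your browser, keeps the syntax consistent. This is a hypothetical Python sketch: the phrase and host are placeholders, and it only builds the query; you still open the URL and review the results yourself.

```python
from urllib.parse import urlencode


def indexing_check_query(snippet, host):
    """Build an exact-phrase query restricted to one host."""
    # Straight double quotes request an exact-phrase match, so strip any
    # double quotes inside the snippet and collapse runs of whitespace.
    phrase = " ".join(snippet.replace('"', "").split())
    return f'"{phrase}" site:{host}'


def google_search_url(query):
    """Percent-encode the query into a Google search URL to open in a browser."""
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    q = indexing_check_query("Unique string of text", "www.yoursite.com")
    print(q)
    print(google_search_url(q))
```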

For a more accurate, if somewhat more involved, assessment, you can use a method that relies on Google Search Console to render the page:

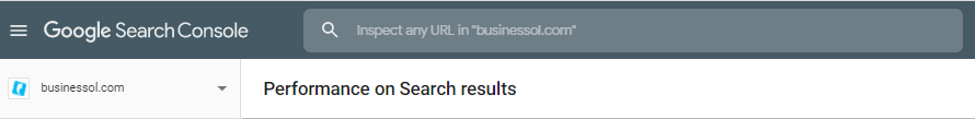

Use the URL Inspection tool on the URL you want to evaluate:

Assuming your URL is indexed, you will see the following message:

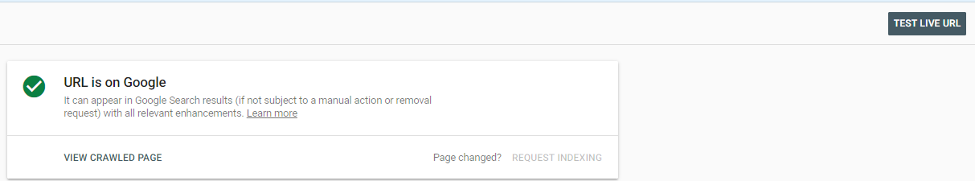

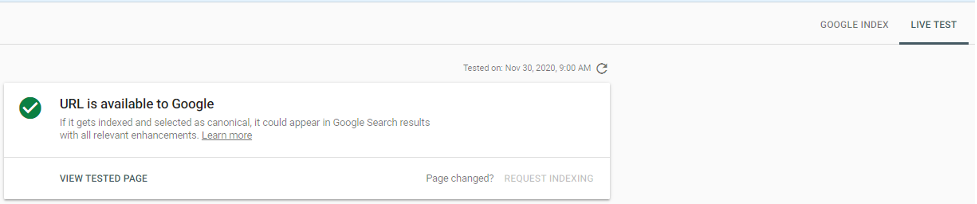

Click on the Test Live URL button and you should get the following message:

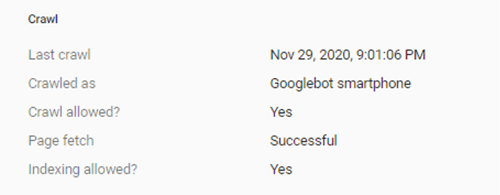

Keep in mind that this gives you up-to-date results, but they may not reflect what Google currently has in its index. If you have recently updated your page, you can check the crawl date to see when Google last crawled it:

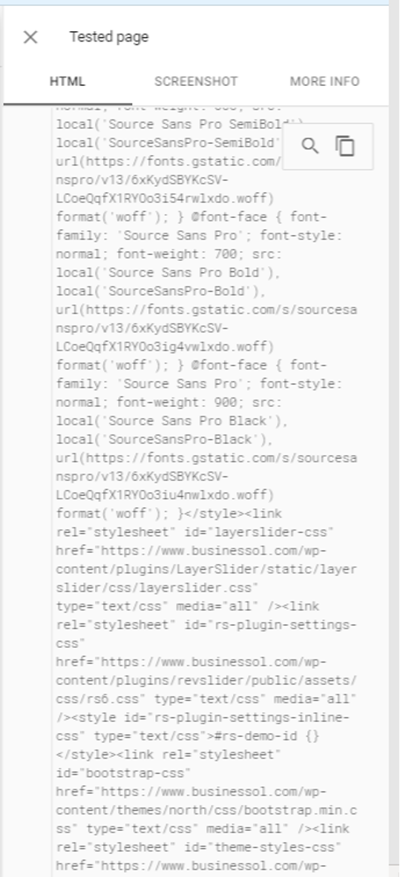

Click the View Tested Page link. The right-hand panel will populate with the rendered HTML:

Press Control+F in this panel and search for the string of content you are testing. If you find the text, Google is able to render it, which is a strong indication that it can be indexed.
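Searching raw HTML with Control+F can produce false positives when the phrase only appears inside a script or inline JSON rather than in the rendered page text. If you paste the rendered HTML into a file, a short Python sketch like the following checks only the text content. The function names are hypothetical, and it relies on the standard library’s `html.parser`, which is lenient but not a browser-grade parser.

```python
from html.parser import HTMLParser


class _VisibleText(HTMLParser):
    """Collect text nodes, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_in_rendered_html(rendered_html, snippet):
    """True if `snippet` appears in the page text (case- and whitespace-insensitive)."""
    parser = _VisibleText()
    parser.feed(rendered_html)
    parser.close()
    # Join without separators so inline tags like <b> don't split words,
    # then collapse whitespace so line breaks in the HTML don't break the match.
    text = " ".join("".join(parser.chunks).split()).lower()
    needle = " ".join(snippet.split()).lower()
    return needle in text
```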

This method can be used to evaluate JavaScript indexing issues and partial indexing issues. For sites you don’t have Search Console access to, some alternatives can be found here:

https://www.tldrseo.com/fetch-and-render-alternatives/

Fixing these issues is a long and complicated topic that exceeds the space available in this post, but suffice it to say that it requires a knowledgeable and experienced professional.

In terms of 2021 planning and priorities, you should ensure that all of your current content is being correctly indexed. Prioritize by importance and make sure you evaluate every template on your site. Set aside time throughout the year to continually evaluate how your content is being indexed and to confirm that all of your URLs have been indexed.

3) Eliminate duplicate content issues

This is another potentially complex area of focus. Most of the duplicate content issues that I see are a result of redundant URL paths to the same content. In many cases, this situation has been created inadvertently. In general, you should ensure that the following best practices have been observed:

- Only one URL path to each unique piece of content whenever possible.

- If there is more than one path, use the preferred URL consistently in all internal links, canonical tags, hreflang tags, XML sitemaps and HTML sitemaps.

- Use lowercase letters only in URL strings.

- Use redirects when possible to eliminate ambiguity. The most common use cases are redirecting any URL containing capital letters to its lowercase-only version, non-www to www (or vice versa, depending on preference), and HTTP to HTTPS.

- Put canonical tags on all pages of your site and ensure that they are consistent with your preferred URL for each page and that they reference absolute URLs.

- Use the parameter exclusion functionality in Search Console if applicable to ignore campaign tags or other unnecessary dynamic parameters.

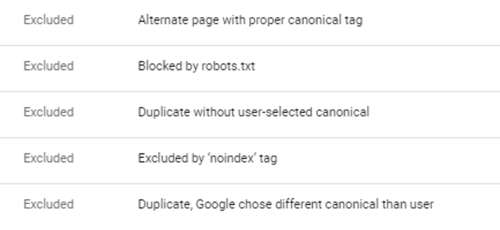
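The best practices above boil down to mapping every variant of a URL onto one preferred form, then using that form everywhere: redirect targets, canonical tags, internal links and sitemaps. This Python sketch assumes a site that prefers `https://www.yoursite.com` with lowercase paths and ignores a hypothetical list of campaign parameters; adjust those constants to your own choices. Grouping crawled URLs by their preferred form surfaces candidate duplicate paths.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical site preferences: adjust to match your own conventions.
PREFERRED_SCHEME = "https"
PREFERRED_HOST = "www.yoursite.com"
HOST_VARIANTS = {"www.yoursite.com", "yoursite.com"}
# Assumed list of tracking parameters that should never create a new URL.
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "utm_term", "utm_content", "gclid"}


def preferred_url(url):
    """Absolute preferred URL for one of our pages; other hosts are returned unchanged."""
    parts = urlsplit(url)
    if parts.hostname not in HOST_VARIANTS:
        return url
    path = parts.path.lower() or "/"  # lowercase-only paths, root as "/"
    kept = [(k, v) for k, v in parse_qsl(parts.query, keep_blank_values=True)
            if k.lower() not in IGNORED_PARAMS]
    # Fragments are never sent to the server, so they are dropped.
    return urlunsplit((PREFERRED_SCHEME, PREFERRED_HOST, path, urlencode(kept), ""))


def duplicate_groups(urls):
    """Group crawled URLs that collapse to the same preferred URL."""
    groups = defaultdict(set)
    for url in urls:
        groups[preferred_url(url)].add(url)
    return {preferred: sorted(variants) for preferred, variants in groups.items() if len(variants) > 1}
```

Every group returned is a set of paths that should 301 to the key and list the key as their canonical.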

You should regularly review Google Search Console to monitor which URLs Google is indexing and which it is excluding, so you can identify any unintended duplicate content issues.

This is especially true for the Excluded tab of the Coverage report, in particular the categories discussed below.

The pages in the Alternate page with proper canonical tag category should be pages that correctly declare a different canonical URL, and you will want to validate that this is the case. Check the Blocked by robots.txt and Excluded by noindex tag categories if you have intentionally excluded URLs to avoid duplicate content, which should typically be a last resort. Keep in mind that a URL blocked by the robots.txt file is not invisible to Google; robots.txt only stops Google from crawling it. Google can still discover the URL through links, including external links, and may index it without its content. The noindex tag is the better option to ensure that Google will not index a page, but Google can only see that tag on pages it is allowed to crawl, so don’t also block a noindexed page in robots.txt.
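The difference between blocking and noindexing can be checked per URL. This Python sketch uses the standard library’s `urllib.robotparser` together with the page’s robots directives (the meta robots tag or X-Robots-Tag header value, a hypothetical input you collect yourself). Note that Python’s parser uses simple prefix matching and does not implement Google’s wildcard and longest-match rules, so treat its answer as an approximation.

```python
from urllib.robotparser import RobotFileParser


def index_control_status(robots_txt, url, robots_directives="", user_agent="Googlebot"):
    """Classify how a URL is kept out of (or left in) the index.

    robots_txt: contents of your robots.txt file.
    robots_directives: meta robots / X-Robots-Tag value, e.g. "noindex, follow".
    """
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    crawlable = parser.can_fetch(user_agent, url)
    directives = {d.strip().lower() for d in robots_directives.split(",")}
    has_noindex = bool(directives & {"noindex", "none"})
    if not crawlable and has_noindex:
        # Google cannot crawl the page, so it never sees the noindex.
        return "conflict"
    if not crawlable:
        # Not crawled, but the URL can still be indexed if it is linked to.
        return "blocked"
    if has_noindex:
        return "noindex"
    return "indexable"
```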

The Duplicate without user-selected canonical and the Duplicate, Google chose different canonical than user categories are especially important to evaluate and correct in order to prevent duplicate content issues.

For 2021 planning, we recommend an end-of-year technical audit to identify any duplicate content issues so they can be put on the radar for next year. Time should also be set aside every month to evaluate the state of the site with respect to these issues. The larger and more complex the site, the more often it should be monitored.

4) Fix Broken Things That Affect SEO

Here is a list of things you can do to clean up broken elements on your site. These issues should be evaluated at least quarterly.

- Eliminate as many 404 errors as possible by using a 301 redirect to point them towards a suitable user experience on your site.

- Fix any broken links on your site, both internal and external.

- Make sure that all pages of your site have at least one link that points to somewhere else on your site. This is especially true for PDF documents.

- Fix Schema errors. We will cover this in greater detail in a subsequent article, but in the meantime, check the Enhancements section of Google Search Console to see which Schema-based enhancements Google has recognized for your site and what errors they might have.

- Ensure that all page titles and meta descriptions are unique on your site.

- Ensure all redirects on your site are 301s rather than 302s unless the redirect really is temporary. Yes, 302 redirects pass PageRank, and yes, Google will sometimes treat them as 301s anyway, but often the original URL will continue to appear in the index behind a 302 redirect, which is usually not the desired outcome. For consistent results, always use 301 redirects for permanent redirection.

- Make sure your heading tags correctly summarize the content blocks of your page. It doesn’t really matter whether you use an H1 or another heading level, and multiple H1 tags are not an issue. However, your heading tags should give users a coherent outline of the content on the page, which also provides Google with valuable context. This is more of an on-page issue than a technical one, but issues related to H tags are often flagged in technical reports.

- Fix any Mobile Usability errors flagged in Search Console, including blocked resources that may prevent Google from fully rendering your page.

- Avoid nofollow attributes unless they are on links from user-generated content or on external links that you cannot vouch for (although that raises the question of why those links exist in the first place). PageRank sculpting has been dead for years, and there is no reason to nofollow internal links, especially on your own blog pages that link within your site (a reasonably common error). You may want to install a nofollow Chrome extension to quickly identify such issues:

https://chrome.google.com/webstore/detail/nofollow/dfogidghaigoomjdeacndafapdijmiid?hl=en

- Google no longer uses the rel=next and rel=prev tags, so they cannot be relied on to resolve issues with your pagination strategy:

https://searchengineland.com/google-no-longer-supports-relnext-prev-314319

Bing still uses the tags for page discovery but not page merging:

https://www.seroundtable.com/how-bing-uses-rel-next-and-rel-prev-27298.html

You SHOULD NOT canonicalize paginated pages back to the root page. Instead, let Google index all of your paginated pages. If you want to limit keyword cannibalization or prevent page 2 or beyond from outranking page 1, try using less optimized page titles on the deeper pages. You want to ensure that Google can use these pages to find all of the links that appear on them, especially in ecommerce listings.

- Fix any pages that are generating 500-level server errors. These can usually be found in the Coverage section of Google Search Console.

- Eliminate redirects with multiple hops as much as possible. Ideally all redirects should resolve to their final destination on the initial redirect.
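The redirect items above (301 versus 302, and multi-hop chains) are easy to check in bulk once you have a list of redirects from a crawler export. This Python sketch assumes a hypothetical mapping of source URL to (status code, target URL); it follows each chain to its final destination and flags temporary status codes, extra hops and loops, so each source can be repointed with a single 301.

```python
# Status codes that signal a temporary redirect (301 and 308 are permanent).
TEMPORARY_CODES = {302, 303, 307}


def audit_redirects(redirects):
    """Follow every redirect chain and flag temporary codes, extra hops and loops.

    redirects: hypothetical crawl export mapping source URL -> (status code, target URL).
    Returns source URL -> {"final": final URL or None for a loop, "hops": int, "issues": list}.
    """
    report = {}
    for source in redirects:
        current, hops, issues, seen = source, 0, [], {source}
        while current in redirects:
            status, target = redirects[current]
            hops += 1
            if status in TEMPORARY_CODES and "temporary" not in issues:
                issues.append("temporary")
            if target in seen:
                issues.append("loop")
                current = None
                break
            seen.add(target)
            current = target
        if hops > 1:
            issues.append("multi-hop")
        report[source] = {"final": current, "hops": hops, "issues": issues}
    return report


if __name__ == "__main__":
    chains = {
        "http://yoursite.com/old": (301, "https://yoursite.com/old"),
        "https://yoursite.com/old": (302, "https://www.yoursite.com/new"),
    }
    for source, result in audit_redirects(chains).items():
        if result["issues"]:
            print(source, "->", result["final"], result["issues"])
```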

In terms of 2021 planning, you should budget for ongoing monitoring and resolution of these issues on a consistent basis. The larger and more complex your site, the more often you will want to be on the lookout for problems of this nature. This is especially true for enterprise B2B companies that have a lot of change or have multiple CMS platforms and/or microsites. It is very common for blogs to run on a separate CMS; in those cases, a separate technical audit should be done specifically for the blog.

5) Minimize Depth of Content and Maximize Internal Linking Signals

While this topic is not strictly technical SEO, it has the potential to be very impactful. Of the six topics in this series, this is where it fits best, so I have included it here.

Let me preface this section with the following excerpt from Search Engine Journal, which summarizes a response from John Mueller, one of Google’s chief SEO evangelists:

“In Mueller’s response, he stated that the number of slashes in a URL does not matter.

What does matter is how many clicks it takes to get to a page from the home page.

If it takes one click to get to a page from the home page, then Google would consider the page more important. Therefore it would be given more weight in search results.

On the other hand, Google would see a page as being less important if it takes several clicks to visit after landing on the site’s home page.”

This quote is taken from the following page:

https://www.searchenginejournal.com/google-click-depth-matters-seo-url-structure/256779/

Optimizing your internal link structure is predicated on ensuring that your most important content is as close to your home page as possible in terms of the number of clicks it takes to find it. This requires properly leveraging links in your navigation menu, in your content, in your footer, breadcrumb links and anywhere else that it makes sense for users to create link connectivity.

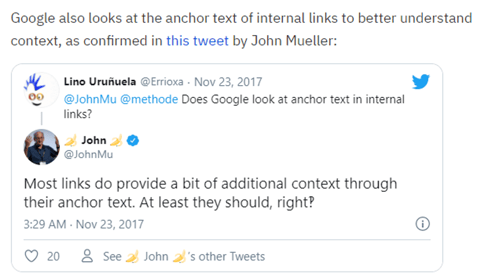
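Click depth as Mueller describes it is the shortest path from the home page, which a breadth-first search over your internal link graph computes directly. This is a minimal Python sketch; the `links` mapping (each page to the pages it links to) stands in for a hypothetical crawler export, and the depth cut-off in the usage example is arbitrary, not a Google number.

```python
from collections import deque


def click_depths(links, home):
    """Minimum number of clicks from `home` to every reachable page (breadth-first search)."""
    depth = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depth:  # first visit in BFS order is the shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


if __name__ == "__main__":
    site = {
        "/": ["/services", "/blog"],
        "/services": ["/services/seo"],
        "/blog": ["/blog/post-1"],
        "/blog/post-1": ["/services/seo", "/services/ppc"],
    }
    depths = click_depths(site, "/")
    deep = sorted(page for page, d in depths.items() if d >= 3)
    print(deep)  # ['/services/ppc']
```

Important pages that show up deep in this list are candidates for links from the navigation, footer or higher-level content.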

Optimizing your internal link structure also requires descriptive anchor text with some relevant keyword specificity, because anchor text is still used by Google as a ranking factor.

It’s important to use keyword-focused anchor text, but only in a way that is relevant within the context of the page, and you should vary how you link to any one page from your content. Google understands semantic relationships better than it ever has before, so it isn’t necessary to use the same keyword for every link that points to the same page. Making things as relevant as possible for the end user is the best recommendation I can give, and that includes optimizing the text that surrounds your internal links.

You should also ensure that every page of your site has at least one internal link that points to it. This is referred to as eliminating orphan pages.
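A crawler that starts at the home page can never reach an orphan page, so finding orphans means comparing the pages you know exist (for example, from your XML sitemap or analytics) against the pages that receive at least one internal link. A minimal Python sketch, with hypothetical inputs:

```python
def find_orphans(known_pages, links, home):
    """Known pages that no other page links to.

    known_pages: URLs from a second source such as the XML sitemap (hypothetical input).
    links: crawl export mapping each page to the pages it links to.
    """
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself doesn't rescue it
    }
    return sorted(page for page in known_pages if page != home and page not in linked_to)
```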

As previously mentioned, most links should not carry a nofollow attribute. The exceptions are the following cases, which Google has expanded its vocabulary of rel attributes to accommodate:

- rel="sponsored": Use the sponsored attribute to identify links on your site that were created as part of advertisements, sponsorships or other compensation agreements.

- rel="ugc": UGC stands for User Generated Content, and the ugc attribute value is recommended for links within user generated content, such as comments and forum posts.

- rel="nofollow": Use this attribute for cases where you want to link to a page but don’t want to imply any type of endorsement, including passing along ranking credit to another page.
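To catch internal nofollow links across many pages rather than one page at a time with a browser extension, you can parse each page’s HTML and list internal links whose rel attribute contains nofollow. This Python sketch treats only the page’s exact host as internal (an assumption: subdomains and microsites count as external) and leaves ugc and sponsored links alone, since those values are intended for the cases described above. The function names are hypothetical.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlsplit


class _LinkCollector(HTMLParser):
    """Collect (href, rel tokens) for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if href:
            # rel is a space-separated, case-insensitive list of tokens
            rel = set((attributes.get("rel") or "").lower().split())
            self.links.append((href, rel))


def nofollowed_internal_links(html, page_url):
    """Absolute URLs of links to the page's own host that carry rel="nofollow"."""
    collector = _LinkCollector()
    collector.feed(html)
    collector.close()
    host = urlsplit(page_url).hostname
    flagged = []
    for href, rel in collector.links:
        absolute = urljoin(page_url, href)
        if urlsplit(absolute).hostname == host and "nofollow" in rel:
            flagged.append(absolute)
    return flagged
```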

More information can be found here:

https://developers.google.com/search/blog/2019/09/evolving-nofollow-new-ways-to-identify

Additionally, for a deeper dive into maximizing your internal link structure, I recommend this excellent article from Ahrefs:

https://ahrefs.com/blog/internal-links-for-seo/

In terms of 2021 planning, the scope of this work will depend heavily on the opportunities that already exist on your site and what is feasible to change. We recommend an internal link audit by a qualified professional to identify all opportunities, then prioritizing them based on potential impact and ease of implementation. Again, many of these improvements will require IT support, so the sooner you get them in the queue, the sooner you are likely to see results.

I hope this first article in our 2021 B2B SEO Planning series has provided valuable food for thought on which areas to focus on and prioritize from a technical SEO standpoint going into the new year. SEO is always changing, and so are websites and their technical implementations. As you plan and budget for the new year, remember to set aside resources to continually monitor and resolve technical SEO issues, especially in 2021, when the launch of Core Web Vitals as a ranking factor is likely to require significant resources.